When Anyone Can Build Anything

We will be spared our disillusion

DeNiro: <looks at ronin figurine display>

Lonsdale: My hobby... one grows old...

DeNiro: I knew a lotta fellas, friends of mine, who just wanted to live to open a bar.

Lonsdale: Had they lived would they have done it?

DeNiro: <smirk>

Lonsdale: Then they were spared their disillusion.

DeNiro: That's right.

I have been running a lot more Claude Code lately, usually inside of Cursor because I still need an IDE. I flipped over to my API credentials for a bit to see how the prices looked. And I built out an entire new database table structure, model, migrations, and complete UI for just a few bucks. A little more compacting and clearing of the context would have made it cheaper (we’re all still learning these tools).

I frequently hit usage limits, which means it's time for an upgrade. And yet I'm annoyed. I'm spending a few bucks to get a ridiculous amount of work done, and I've gotten so used to it that I'm complaining. $20, $100, or $200 a month are all ridiculously cheap prices for high-quality code output. But they feel expensive.

And that's because I’m like a 20th-century photographer with a modern digital camera. I’m still planning to be bathed in red light for hours developing each frame of film. I haven’t internalized the new workflow: thousands of photos in a session and easy processing. This has been my livelihood for years; I am too used to meticulously typing every piece of syntax.

But writing code is on a new path now. Software is a fundamentally virtual good. Like writing, it has no real physical equivalent, just pure abstraction riding on strands of thought. And now thought — all of intelligence — is in the same deflationary spiral as a lot of other modern goods.

The marginal cost of creating software is rapidly going to zero.

I am wrestling with this, we all are, but there are pragmatic considerations too. How do I avoid per-token costs and usage limits on giant cloud infrastructures? Because as the cost goes down and the intelligence gets better, the number of tokens I'll want in the future is definitely going to be orders of magnitude larger than what I have now.

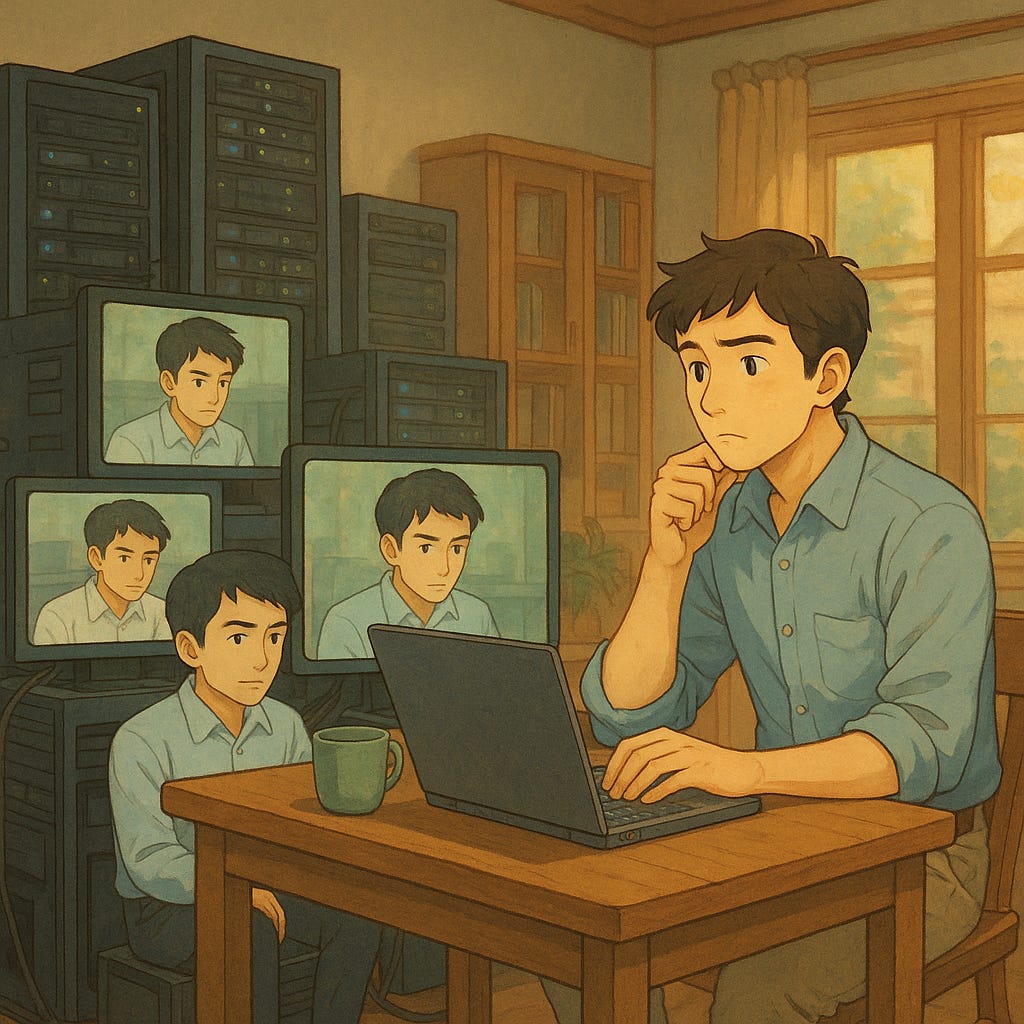

The answer is, of course, own the hardware. So as a thought experiment, I decided to spec out a future rig (the spec is at the end of this post). And I learned that within a couple of years I’ll have what amounts to a datacenter of excellent engineers working in my house. These engineers are not messy, fretful, unfocused humans. They are diligent, studious, and exceptionally fast. They'll work 24/7 on whatever I point them at.

This setup will cost what a nice car costs. It will pay for itself in a couple of months, and I’ll measure tokens on my energy bill. Then I'll have to face the real question: with an abundance of tireless genius at my disposal, what am I going to build? What excuse will I have when I don't?

If you can code at all, you will eventually run across a conversation with a friend or acquaintance that looks like this:

"So you can write websites right?"

"Yes..."

"Man, I have this great idea I've been thinking about for a while! Here it is... I just need someone who can code to help get it off the ground. I know it will be worth billions, so hey! Why don't we build it together!"

This inevitable conversation leaves the coder disillusioned and the asker deflated. The problem is that everyone is in love with their own ideas, but not so in love with them that they're willing to go learn to code and do it themselves. It's too costly. But the cost is not measured in effort, or time, or even money.

The cost is having to realize how bad the idea was in the first place.

The coder has already done this. They have suffered through the pain and fire and demoralization of trying to get a simple fucking string to print and “dear God why won’t the loop just work” and “who wrote this syntactic drivel?” and “oh wait that was me three days ago, I am so bad at this”. And now here we are in this brave new world where the marginal cost of any software implementation is going to zero. Our trials may become worthless. Our scars and built-up mental muscles are being replaced by faster, cheaper silicon. “Real programmers” wear that suffering like a medal. And now some kid with Claude can build in an afternoon what used to take weeks. The war stories are irrelevant. The blood and sweat are irrelevant. The injustice of this is irrelevant. What matters is output.

We must get used to this: the marginal cost of most intelligence is moving towards zero.

Now the real differences will be revealed. Everybody has a grand idea. Everyone has the important thing they might get to, someday. That’s our dream: someday. It’s our fair-weather maiden waiting for us just over the horizon of all the important things we need to do right now. The problem is that our ships are suddenly faster. Our sails are always full, and the horizon that was once a drunken mirage is now within our grasp. And crumbling.

None of us are going to be spared our disillusion. Our ideas are going to be implementable in ways we've never seen before. If we have an idea for something, we can test it out and see if it's good. We can do it in days instead of years. Possibility is drowning us where once we hid behind the dream. It is a simple step to articulate an idea. And only our will stands between that idea and a silicon army ready to execute it.

What does this world look like? What happens when everybody can take their napkin sketches and start narrating their creation?

Most of those napkins will burn. The execution will still be clunky and difficult, the market indifferent, and the problems will turn out to be irrelevant. But this is the thing about cheap intelligence — you get to find out. There is no longer any excuse for the comfort of our speculation about what might be. You build it, you ship it, you watch it fail or flourish.

I've been watching friends run small businesses using AI to wire together brittle systems that mostly work, the same way Excel has been used for decades. Serious browser tab fatigue, jumping from tool to tool, nothing polished. But when you build for yourself, your feedback loop is instant. Their systems work for them, and that's enough.

This is going to become the default: not buying polished software that somewhat fits your needs, but building rough systems that fit perfectly. The valuable software skills shift toward systems thinking and interfaces. What matters is context, and you can specialize your own agents and systems as much as you like.

"If you want to build a ship, do not drum up the men to gather wood, divide work and give orders. Instead, teach them to yearn for the vast and endless sea."

— Antoine de Saint-Exupéry

Now everyone has their own ship and the sea is crowded with solo sailors following their own navigation. When everyone can do their own thing, convincing people to work on your thing becomes exponentially harder. The winners will be compelling enough to pull talent away from personal projects.

When capability is universal, vision is everything.

We're losing our excuses. Sidney Sheldon said that "A blank piece of paper is God's way of telling us how hard it is to be God." Now we’re learning that it isn't just about how hard it is to create, but how hard it is to decide what to create.

The machines can build now. But there’s no story behind the building. They can’t tell you why you should care. They can't convince someone to abandon their own ship to help with yours. They can't explain why this thing and not that thing deserves to exist.

We must still create the narrative for these tools, just as narratives have been created for us. The constraint isn't capability anymore. This new technology demands old virtues: wisdom to know what's worth creating, storytelling to make others believe it matters too.

What deserves to exist? Why should others pay attention? What story makes this matter?

None of us are going to be spared our disillusion. But we also won’t be spared our chance to find out.

If you’re wondering about the details of a future hardware spec, here’s what I came up with. People are running Qwen and now OpenAI's open-weight models on M3 laptops. You can buy a GPU for $2-3k now, and NVIDIA is prototyping a cutting-edge Grace Blackwell GPU in a consumer box called DGX Spark.

2-4 DGX Spark GPU nodes, each with roughly a petaflop of AI compute and 128-256 GB of RAM. Onboard fast storage for models.

Fast 100GbE local network fabric. This will probably get baked into a prosumer solution.

Any old Mac laptop for the human machine.

Set up a planning agent that can spawn multiple implementation agents. Tune the reasoning knobs as needed.

Manage the context windows appropriately and enjoy at least 1,000 tokens/second across agents.

Running around the clock, that’s about 86 million tokens per day (1,000 tokens/s × 86,400 s/day), or $500-$1,000 worth of tokens at API prices.

Even factoring in your increased power footprint (about an additional 1,800 kWh/month), that setup would pay for itself in saved token spend within a couple of months for a power user.
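To make the payback arithmetic easy to rerun with your own numbers, here is a minimal back-of-envelope sketch in Python. The 1,000 tokens/second and 1,800 kWh/month figures come from the spec above; everything else is a hypothetical placeholder. The per-million-token price range of $6-$12 is simply what the $500-$1,000/day figure implies for ~86 million tokens, the $60,000 rig cost stands in for "what a nice car costs", and the $0.20/kWh electricity rate is an assumption. It also assumes the rig runs flat out every hour of every day, which real workloads will not.

```python
# Hypothetical back-of-envelope payback calculator for a home inference rig.
# All inputs are assumptions; substitute your own prices and throughput.

SECONDS_PER_DAY = 24 * 60 * 60
DAYS_PER_MONTH = 30


def tokens_per_day(tokens_per_second: float) -> float:
    """Tokens generated if the rig runs at full throughput around the clock."""
    return tokens_per_second * SECONDS_PER_DAY


def payback_months(
    rig_cost_usd: float,
    tokens_per_second: float,
    api_price_per_million_usd: float,
    extra_kwh_per_month: float,
    electricity_usd_per_kwh: float,
) -> float:
    """Months until avoided API spend, minus extra power cost, covers the rig."""
    monthly_tokens = tokens_per_day(tokens_per_second) * DAYS_PER_MONTH
    avoided_api_spend = monthly_tokens / 1_000_000 * api_price_per_million_usd
    power_cost = extra_kwh_per_month * electricity_usd_per_kwh
    net_savings = avoided_api_spend - power_cost
    if net_savings <= 0:
        return float("inf")  # the rig never pays for itself
    return rig_cost_usd / net_savings


if __name__ == "__main__":
    print(f"tokens/day: {tokens_per_day(1_000):,.0f}")
    # Hypothetical rig cost and electricity rate; price bounds implied by $500-$1,000/day.
    for price in (6, 12):
        months = payback_months(60_000, 1_000, price, 1_800, 0.20)
        print(f"${price}/M tokens -> payback in {months:.1f} months")
```

Under these placeholder inputs the power bill is small next to the avoided API spend, so the payback period is driven almost entirely by the rig price and the token price you would otherwise pay.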

There will always be a case for using frontier models in huge data centers, for both training and inference. But only when necessary, and as we are learning, for a lot of problems intelligence is not the primary constraint. For many things I don't need Nobel-prize-winning PhDs or Riemann-hypothesis solvers. There will also be new paradigms and a “menagerie of models”, as Greg Brockman likes to say. There is sooooo much coming.